AWS SageMaker: 7 Powerful Reasons to Use This Ultimate ML Tool

Ever wondered how data-driven companies build smart, self-learning systems so fast? Often the answer is a managed platform such as AWS SageMaker, which turns complex machine learning into something far more teams can use. Let’s dive in and see why it’s a powerhouse.

What Is AWS SageMaker and Why It Matters

AWS SageMaker is Amazon’s fully managed service designed to help developers and data scientists build, train, and deploy machine learning (ML) models at scale. Unlike traditional ML workflows that require stitching together multiple tools and infrastructure, SageMaker streamlines the entire process—from data preparation to model deployment—into a single, cohesive environment.

Launched in 2017, SageMaker was Amazon Web Services’ bold answer to the growing complexity and fragmentation in the machine learning ecosystem. It emerged at a time when organizations were struggling to move ML projects from experimentation to production. By offering a unified platform, AWS SageMaker dramatically reduced the time and expertise needed to go from idea to deployment.

Core Components of AWS SageMaker

At its heart, AWS SageMaker is built around several integrated components that handle different stages of the ML lifecycle. These include SageMaker Studio (the web-based IDE), SageMaker Notebooks (Jupyter-based environments), SageMaker Training, SageMaker Hosting, and SageMaker Pipelines for workflow automation.

- SageMaker Studio: A unified visual interface for every step of ML development.

- SageMaker Notebooks: Fully managed Jupyter notebooks with pre-installed ML libraries.

- SageMaker Experiments: Track and compare different model iterations.

These components work together seamlessly, allowing users to focus on model logic rather than infrastructure management. For example, you can start with a notebook, train a model using built-in algorithms, and deploy it as a real-time endpoint—all without leaving the SageMaker ecosystem.

How AWS SageMaker Simplifies Machine Learning

One of the biggest challenges in ML is the so-called “last mile” problem—getting models out of the lab and into production. AWS SageMaker tackles this by automating many of the tedious and error-prone steps involved in model training and deployment.

For instance, SageMaker automatically handles instance provisioning, distributed training, and model scaling. It also integrates with AWS security and monitoring tools, ensuring compliance and observability from day one. This level of automation is especially valuable for teams without dedicated ML engineers.

“SageMaker reduces the undifferentiated heavy lifting in machine learning, allowing you to focus on what matters: building better models.” — AWS Official Documentation

Key Features That Make AWS SageMaker Stand Out

What truly sets AWS SageMaker apart from other ML platforms is its depth of features and tight integration with the broader AWS ecosystem. Whether you’re a beginner or a seasoned ML practitioner, SageMaker offers tools that adapt to your skill level and project needs.

Automated Model Training and Hyperparameter Tuning

Training a machine learning model often involves tweaking dozens of hyperparameters—learning rates, batch sizes, optimizer types—to get the best performance. Doing this manually is time-consuming and inefficient.

AWS SageMaker addresses this with its Automatic Model Tuning (also known as hyperparameter optimization or HPO). It uses advanced search strategies like Bayesian optimization to explore the hyperparameter space and find the best combination. You simply define the range of values, and SageMaker runs multiple training jobs in parallel to identify the optimal settings.

This feature can reduce model development time from weeks to days, especially for deep learning models with complex architectures. It’s particularly useful for teams experimenting with different model configurations or tuning large neural networks.
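The tuning setup described above can be sketched as the configuration you would pass to boto3’s `create_hyper_parameter_tuning_job` as `HyperParameterTuningJobConfig`. This is a minimal sketch, not a definitive setup: the metric name `validation:auc`, the hyperparameter names, the ranges, and the job limits are all illustrative assumptions, and the field names should be checked against the current API reference.

```python
def build_tuning_config(max_jobs=20, max_parallel=4):
    """Return a HyperParameterTuningJobConfig-style dict (illustrative values)."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        # Bayesian search, as described in the text above.
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",  # assumed objective metric
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # The API takes range bounds as strings, not numbers.
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Linear"},
            ],
            "CategoricalParameterRanges": [],
        },
    }


config = build_tuning_config()
print(config["ResourceLimits"])
```

In a real job this dict would sit next to a `TrainingJobDefinition` that names the algorithm image, IAM role, and data channels.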

Built-in Algorithms and Pre-Trained Models

Not every team has the resources to build models from scratch. That’s where SageMaker’s library of built-in algorithms comes in. These are high-performance, scalable implementations of common ML algorithms such as XGBoost, K-Means, Linear Learner, and Random Cut Forest (for anomaly detection).

These algorithms are optimized for AWS infrastructure and can handle large datasets efficiently. They’re also accessible via the SageMaker SDK, making it easy to integrate them into your workflows. For example, you can train an XGBoost model on terabytes of data using distributed training without writing low-level code.
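To make the “no low-level code” point concrete, here is a hedged sketch of the request you might hand to boto3’s `create_training_job` for the built-in XGBoost algorithm. The job name, S3 paths, role ARN, instance settings, and hyperparameters are hypothetical placeholders, and the algorithm image URI is Region-specific, so it is passed in rather than guessed.

```python
def build_xgboost_training_request(job_name, image_uri, role_arn,
                                   train_s3_uri, output_s3_uri,
                                   instance_type="ml.m5.xlarge",
                                   instance_count=2):
    """Build a create_training_job request dict (all values illustrative)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,      # Region-specific; look it up
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "ContentType": "text/csv",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                # Give each instance a different subset of the S3 objects.
                "S3DataDistributionType": "ShardedByS3Key",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 50,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # Hyperparameter values are passed as strings.
        "HyperParameters": {"objective": "binary:logistic", "num_round": "100"},
    }


request = build_xgboost_training_request(
    job_name="xgb-demo",
    image_uri="<region-specific-xgboost-image-uri>",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    train_s3_uri="s3://example-bucket/train/",
    output_s3_uri="s3://example-bucket/output/",
)
print(request["ResourceConfig"])
```

With boto3 installed and credentials configured, the dict would be passed as `boto3.client("sagemaker").create_training_job(**request)`.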

In addition, AWS SageMaker supports JumpStart, a feature that provides access to hundreds of pre-trained models and solution templates for common use cases like fraud detection, document processing, and forecasting. JumpStart allows you to deploy state-of-the-art models with just a few clicks, accelerating time-to-value.

Real-Time and Batch Inference Capabilities

Once a model is trained, it needs to make predictions—either in real time (e.g., for recommendation engines) or in batches (e.g., for nightly data processing). AWS SageMaker supports both modes seamlessly.

For real-time inference, SageMaker deploys models as HTTPS endpoints that can handle thousands of requests per second when sized appropriately. These endpoints can be configured to scale automatically with traffic and can be secured using AWS Identity and Access Management (IAM) and Virtual Private Cloud (VPC) configurations.
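Endpoint scaling is set up through Application Auto Scaling. The sketch below builds the two requests involved (registering the endpoint variant as a scalable target, then attaching a target-tracking policy). The endpoint name, capacity limits, cooldowns, and the target of 100 invocations per instance are assumptions for illustration only.

```python
def endpoint_scaling_requests(endpoint_name, variant_name="AllTraffic",
                              min_instances=1, max_instances=4,
                              invocations_per_instance=100.0):
    """Build the two Application Auto Scaling requests for one variant."""
    if not 0 < min_instances <= max_instances:
        raise ValueError("need 0 < min_instances <= max_instances")
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    scalable_target = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }
    scaling_policy = {
        "PolicyName": f"{endpoint_name}-invocations-target",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Add or remove instances to hold this per-instance load.
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance",
            },
            "ScaleInCooldown": 300,   # seconds; assumed values
            "ScaleOutCooldown": 60,
        },
    }
    return scalable_target, scaling_policy


target, policy = endpoint_scaling_requests("churn-endpoint")
print(target["ResourceId"])
```

With boto3 available, these would go to `register_scalable_target(**target)` and `put_scaling_policy(**policy)` on an `application-autoscaling` client.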

For batch inference, SageMaker offers Batch Transform, which allows you to run predictions on large datasets without needing a persistent endpoint. This is ideal for scenarios like generating monthly customer risk scores or processing historical logs.

Both inference types are monitored through Amazon CloudWatch, providing visibility into latency, error rates, and resource utilization.
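As one example of that visibility, a latency alarm on an endpoint could be described like this. It is a sketch under assumptions: the alarm name, the 200 ms threshold, and the evaluation window are illustrative, and the dict follows the shape of CloudWatch’s `put_metric_alarm` request.

```python
def latency_alarm_request(endpoint_name, variant_name="AllTraffic",
                          threshold_ms=200.0):
    """Build a put_metric_alarm request for endpoint model latency."""
    return {
        "AlarmName": f"{endpoint_name}-high-model-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 60,              # seconds per datapoint
        "EvaluationPeriods": 5,    # alarm after 5 consecutive breaches
        # ModelLatency is reported in microseconds, so convert from ms.
        "Threshold": threshold_ms * 1000.0,
        "ComparisonOperator": "GreaterThanThreshold",
    }


alarm = latency_alarm_request("churn-endpoint")
print(alarm["AlarmName"], alarm["Threshold"])
```

The microsecond conversion is the detail most worth double-checking when you pick a threshold.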

AWS SageMaker Studio: The Ultimate ML Workbench

If AWS SageMaker is the engine, then SageMaker Studio is the cockpit. Announced at AWS re:Invent in December 2019, SageMaker Studio was billed by AWS as the first fully integrated development environment (IDE) for machine learning. It brings together notebooks, experiments, data labeling, debugging, and deployment into a single, browser-based interface.

Think of it as the “Visual Studio Code” of machine learning—but purpose-built for data science workflows. With SageMaker Studio, you can write code, visualize data, track experiments, debug models, and collaborate with teammates—all from one place.

Unified Interface for End-to-End ML Development

Before SageMaker Studio, ML development was fragmented. Data scientists used Jupyter notebooks for coding, spreadsheets or databases for tracking experiments, and separate tools for model monitoring. This siloed approach made collaboration and reproducibility difficult.

SageMaker Studio solves this by unifying all these functions. When you open Studio, you see a dashboard that includes:

- Notebooks: Edit and run Jupyter notebooks with one-click access to compute instances.

- Experiments: View and compare model training runs with metrics like accuracy and loss.

- Models: Manage trained models and their deployment status.

- Pipelines: Design and monitor automated ML workflows.

This integration means you can go from data exploration to model deployment without switching tools or contexts. It also makes it easier to audit and reproduce results—critical for regulated industries like finance and healthcare.

Collaboration and Version Control in SageMaker

Machine learning is increasingly a team sport. SageMaker Studio supports collaboration through shared projects, user permissions, and integration with version control systems like AWS CodeCommit and GitHub.

Multiple team members can work on the same notebook or pipeline, with changes tracked and synchronized. You can also set up automated CI/CD pipelines using SageMaker Projects, which templates common ML workflows and connects to AWS CodePipeline for deployment automation.

For example, a data scientist can commit a new model version to a repository, triggering a pipeline that re-trains the model, runs tests, and deploys it to a staging environment—all without manual intervention.

“SageMaker Studio gives teams the tools to collaborate like software engineers, but for machine learning.” — AWS Blog

Scaling Machine Learning with SageMaker Pipelines

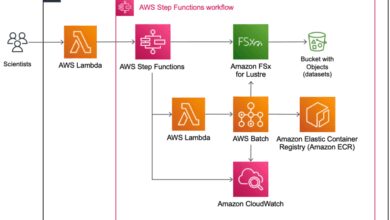

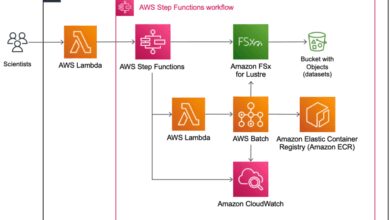

As ML projects grow, so does the need for automation and reproducibility. AWS SageMaker Pipelines is a CI/CD service specifically designed for machine learning workflows. It allows you to define, automate, and monitor the entire ML lifecycle as code.

Pipelines are built using the SageMaker Python SDK and can include steps for data preprocessing, model training, evaluation, and deployment. Each pipeline execution is versioned and logged, making it easy to audit changes and roll back if needed.

Building Reproducible ML Workflows

One of the biggest pain points in ML is the lack of reproducibility. A model that works in a local notebook might fail in production due to differences in data, code, or environment.

SageMaker Pipelines solves this by treating the entire workflow as infrastructure-as-code. You define each step in a pipeline using Python, and SageMaker ensures consistent execution every time. For example:

- Step 1: Ingest and clean data from Amazon S3.

- Step 2: Train a model using SageMaker Training.

- Step 3: Evaluate model performance against a baseline.

- Step 4: Deploy to production only if accuracy improves.

This approach ensures that every model deployment follows the same rigorous process, reducing the risk of errors and improving governance.
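The four steps above can be sketched locally in plain Python to show the control flow, in particular the conditional deployment gate. This is not the SageMaker SDK: a real pipeline would express the same shape with the SDK’s step classes, and the toy majority-class “model” here is purely an assumption for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class RunLog:
    steps: list = field(default_factory=list)
    deployed: bool = False


def train_majority(rows):
    """Toy trainer: the 'model' is just the most common label."""
    return Counter(label for _, label in rows).most_common(1)[0][0]


def evaluate_majority(model, rows):
    """Toy evaluation on the same rows (a real pipeline uses held-out data)."""
    return sum(1 for _, label in rows if label == model) / len(rows)


def run_pipeline(rows, baseline_accuracy, train_fn, evaluate_fn):
    log = RunLog()
    # Step 1: ingest and clean (drop rows with missing values).
    clean = [r for r in rows if None not in r]
    log.steps.append("ingest_and_clean")
    # Step 2: train.
    model = train_fn(clean)
    log.steps.append("train")
    # Step 3: evaluate against the baseline.
    accuracy = evaluate_fn(model, clean)
    log.steps.append("evaluate")
    # Step 4: deploy only if accuracy improves on the baseline.
    if accuracy > baseline_accuracy:
        log.steps.append("deploy")
        log.deployed = True
    return log, accuracy


rows = [(1.0, 1), (2.0, 1), (None, 0), (3.0, 0)]
log, accuracy = run_pipeline(rows, 0.5, train_majority, evaluate_majority)
print(log.steps, log.deployed)
```

Because every run goes through the same function, a model that fails the gate never reaches the deploy step, which is the governance property the pipeline is meant to provide.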

Automating Model Retraining and Deployment

Models don’t stay accurate forever. As real-world data changes, models can drift and lose performance. AWS SageMaker supports automated retraining through event-driven pipelines.

For example, you can set up a pipeline that triggers a retraining job every time new data is uploaded to an S3 bucket. You can also integrate with Amazon SageMaker Model Monitor to detect data drift and automatically initiate retraining when thresholds are exceeded.
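One way to wire up the S3 trigger is a small AWS Lambda function that starts a pipeline execution for each uploaded object. The sketch below assumes a pipeline named `customer-churn-retraining` that defines a parameter called `InputDataUri`; both names are hypothetical. The SageMaker client is passed in so the logic can be exercised without AWS access.

```python
from urllib.parse import unquote_plus

PIPELINE_NAME = "customer-churn-retraining"  # hypothetical pipeline name


def handle_s3_event(event, sagemaker_client, pipeline_name=PIPELINE_NAME):
    """Start one pipeline execution per object in an S3 event notification."""
    started = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        response = sagemaker_client.start_pipeline_execution(
            PipelineName=pipeline_name,
            PipelineParameters=[
                # "InputDataUri" must match a parameter the pipeline defines.
                {"Name": "InputDataUri", "Value": f"s3://{bucket}/{key}"},
            ],
        )
        started.append(response["PipelineExecutionArn"])
    return started
```

Inside Lambda you would create the client once with `boto3.client("sagemaker")` and call `handle_s3_event(event, client)` from the handler. For high-volume buckets, consider batching uploads rather than retraining on every object.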

This level of automation is crucial for maintaining model quality in dynamic environments like e-commerce recommendation systems or fraud detection engines.

Security, Governance, and Compliance in AWS SageMaker

Machine learning systems often handle sensitive data—customer information, financial records, health data. As such, security and compliance are non-negotiable. AWS SageMaker provides robust tools to ensure your ML workflows meet enterprise-grade standards.

Data Encryption and Access Control

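AWS SageMaker supports encryption both at rest and in transit. You can use AWS Key Management Service (KMS) to manage the keys that protect notebook volumes, training volumes, and model artifacts in S3, and to enforce granular access policies. Some protections, such as encrypting traffic between the nodes of a distributed training job, are opt-in, so confirm the defaults for each resource type you use.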

Access to SageMaker resources is controlled through IAM roles and policies. For example, you can restrict who can create training jobs, deploy models, or access notebooks. You can also integrate with AWS IAM Identity Center (formerly AWS Single Sign-On) for centralized identity management.

Additionally, SageMaker supports VPC integration, allowing you to isolate ML workloads in a private network and control outbound traffic using security groups and NACLs.
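As an illustration of combining IAM with VPC isolation, the sketch below builds a policy document that allows training jobs but denies any that omit VPC subnets. Treat it as a starting point only: the statement IDs are made up, and the use of the `sagemaker:VpcSubnets` condition key with a `Null` check is an assumption to verify against the current IAM documentation for SageMaker.

```python
import json


def build_training_guardrail_policy():
    """Return an IAM policy document as a dict (illustrative, unverified)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowTrainingAndDescribe",
                "Effect": "Allow",
                "Action": [
                    "sagemaker:CreateTrainingJob",
                    "sagemaker:DescribeTrainingJob",
                ],
                "Resource": "*",
            },
            {
                # An explicit Deny wins over the Allow above.
                "Sid": "DenyTrainingOutsideVpc",
                "Effect": "Deny",
                "Action": "sagemaker:CreateTrainingJob",
                "Resource": "*",
                # True when the request carries no VPC subnets at all.
                "Condition": {"Null": {"sagemaker:VpcSubnets": "true"}},
            },
        ],
    }


print(json.dumps(build_training_guardrail_policy(), indent=2))
```

In practice you would also narrow `Resource` from `*` to specific ARNs and test the policy in a sandbox account before relying on it.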

Audit Logging and Compliance Monitoring

To meet regulatory requirements (like GDPR, HIPAA, or SOC 2), organizations need detailed audit trails. AWS SageMaker integrates with AWS CloudTrail to log all API calls—such as model deployments or notebook launches—providing a complete record of activity.
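A quick way to review that audit trail is to query CloudTrail for recent SageMaker events and count them per user and action. The sketch below uses the `lookup_events` call shape from boto3’s CloudTrail client, with the client injected so the function can be tried against a stub; the grouping by user and event name is just one possible summary.

```python
from collections import Counter


def summarize_sagemaker_activity(cloudtrail_client, max_results=50):
    """Count recent SageMaker API calls by (user, event name)."""
    response = cloudtrail_client.lookup_events(
        LookupAttributes=[{
            "AttributeKey": "EventSource",
            "AttributeValue": "sagemaker.amazonaws.com",
        }],
        MaxResults=max_results,  # single page only; paginate for more
    )
    return Counter(
        (event.get("Username", "unknown"), event["EventName"])
        for event in response.get("Events", [])
    )
```

With boto3 installed you would pass `boto3.client("cloudtrail")`. Note that event lookup covers only a limited recent window, so long-term audits usually rely on a trail that delivers logs to S3.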

You can also use Amazon CloudWatch to monitor system performance and set up alerts for unusual behavior. For example, if a training job suddenly consumes excessive resources, you can trigger an alert to investigate potential misuse.

These capabilities make SageMaker a preferred choice for industries with strict compliance needs, such as banking, insurance, and healthcare.

Cost Management and Optimization in AWS SageMaker

While AWS SageMaker simplifies ML development, costs can add up quickly—especially with large-scale training jobs or always-on endpoints. Understanding how SageMaker pricing works and applying cost optimization strategies is essential for sustainable ML operations.

Understanding SageMaker Pricing Model

AWS SageMaker uses a pay-as-you-go pricing model based on the resources you consume. Key cost components include:

- Notebook Instances: Billed per hour based on instance type (e.g., ml.t3.medium, ml.p3.2xlarge).

- Training Jobs: Charged based on instance type and duration (GPU instances such as ml.p3.2xlarge cost several dollars per hour, and exact rates vary by Region, so check the current pricing page).

- Inference Endpoints: Real-time endpoints are billed per hour for instance usage plus data transfer fees.

- Batch Transform: Charged based on the number of instances and processing time.

There’s no upfront cost or minimum fee, which makes it accessible for startups and small teams. However, costs can escalate if resources are left running unnecessarily.
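The pay-as-you-go arithmetic is simple enough to sketch: each line item costs hourly rate × instance count × hours. The rates below are placeholders chosen for the example, not real AWS prices, so substitute figures from the pricing page for your Region.

```python
# Placeholder hourly rates in USD; NOT real AWS prices.
EXAMPLE_RATES = {
    "ml.t3.medium": 0.05,
    "ml.m5.xlarge": 0.23,
    "ml.p3.2xlarge": 3.80,
}


def estimate_monthly_cost(usage, rates=EXAMPLE_RATES):
    """Sum rate * instances * hours over all usage line items."""
    total = 0.0
    for item in usage:
        rate = rates[item["instance_type"]]
        total += rate * item["instances"] * item["hours"]
    return round(total, 2)


usage = [
    {"what": "notebook", "instance_type": "ml.t3.medium", "instances": 1, "hours": 40},
    {"what": "training", "instance_type": "ml.p3.2xlarge", "instances": 1, "hours": 10},
    # An always-on endpoint runs roughly 730 hours in a month.
    {"what": "endpoint", "instance_type": "ml.m5.xlarge", "instances": 2, "hours": 730},
]
print(estimate_monthly_cost(usage))
```

Even with made-up rates, the shape of the result is typical: the always-on endpoint dominates the bill, which is why idle resources are the first thing to look for.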

Strategies for Reducing SageMaker Costs

To optimize costs, consider the following best practices:

- Use Spot Instances for Training: SageMaker’s Managed Spot Training runs training jobs on EC2 Spot capacity, which AWS says can reduce costs by up to 90% compared to on-demand instances. Jobs can be interrupted, so enable checkpointing for long runs.

- Auto-Shutdown Notebooks: Configure idle notebook instances to stop automatically after a set period (e.g., 15 minutes), for example with a lifecycle configuration script.

- Right-Size Inference Endpoints: Use auto-scaling and choose instance types that match your workload (e.g., ml.c5.large instead of ml.p3.2xlarge for lightweight models).

- Leverage SageMaker Serverless Inference: For unpredictable workloads, serverless inference automatically provisions and scales compute, charging only per request and duration.

By combining these strategies, teams can significantly reduce their SageMaker bills without sacrificing performance.
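For the spot training strategy, the sketch below shows the extra fields a `create_training_job` request would carry and how the resulting savings can be computed from the `TrainingTimeInSeconds` and `BillableTimeInSeconds` values that `describe_training_job` reports. The time limits and the checkpoint location are illustrative assumptions.

```python
def spot_training_overrides(max_runtime_s=3600, max_wait_s=7200,
                            checkpoint_s3_uri=None):
    """Fields to merge into a create_training_job request for spot training."""
    if max_wait_s < max_runtime_s:
        # The wait limit covers time spent waiting for capacity plus runtime.
        raise ValueError("max_wait_s must be >= max_runtime_s")
    overrides = {
        "EnableManagedSpotTraining": True,
        "StoppingCondition": {
            "MaxRuntimeInSeconds": max_runtime_s,
            "MaxWaitTimeInSeconds": max_wait_s,
        },
    }
    if checkpoint_s3_uri:
        # Checkpoints let an interrupted job resume instead of restarting.
        overrides["CheckpointConfig"] = {"S3Uri": checkpoint_s3_uri}
    return overrides


def spot_savings_percent(training_seconds, billable_seconds):
    """Savings as a percentage of what on-demand time would have billed."""
    if training_seconds <= 0:
        raise ValueError("training_seconds must be positive")
    return round((1 - billable_seconds / training_seconds) * 100, 1)


print(spot_training_overrides(checkpoint_s3_uri="s3://example-bucket/ckpt/"))
print(spot_savings_percent(1000, 300))
```

Actual savings depend on spot availability and interruptions, so measure them per job rather than assuming the headline figure.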

Real-World Use Cases of AWS SageMaker

AWS SageMaker isn’t just a theoretical platform—it’s being used by companies across industries to solve real business problems. From healthcare to retail, SageMaker enables organizations to harness the power of machine learning at scale.

Fraud Detection in Financial Services

Banks and fintech companies use SageMaker to detect fraudulent transactions in real time. By training models on historical transaction data, they can identify suspicious patterns—like unusual spending behavior or location jumps.

For example, a major European bank built a fraud detection system using SageMaker’s Random Cut Forest algorithm and deployed it as a real-time endpoint. The system analyzes transactions in milliseconds and flags high-risk ones for review, reducing false positives by 40%.

Personalized Recommendations in E-Commerce

Online retailers use SageMaker to power recommendation engines that drive customer engagement and sales. By analyzing user behavior, purchase history, and product attributes, models can suggest relevant items to each shopper.

One global e-commerce platform used SageMaker to build a deep learning-based recommendation system. They trained the model using SageMaker’s distributed training capabilities and deployed it with auto-scaling to handle peak traffic during holiday sales.

Predictive Maintenance in Manufacturing

Manufacturers use SageMaker to predict equipment failures before they happen. By analyzing sensor data from machines, models can detect early signs of wear and tear, enabling proactive maintenance.

A leading automotive manufacturer implemented a predictive maintenance solution using SageMaker. They trained models on vibration, temperature, and pressure data, reducing unplanned downtime by 30% and saving millions in repair costs.

Getting Started with AWS SageMaker: A Step-by-Step Guide

Ready to try AWS SageMaker yourself? Here’s a simple roadmap to get you started, whether you’re a beginner or an experienced developer.

Setting Up Your SageMaker Environment

1. Sign in to the AWS Management Console and navigate to SageMaker.

2. Create a SageMaker Studio domain or launch a Jupyter notebook instance.

3. Choose an instance type (start with ml.t3.medium for learning).

4. Attach an IAM role with the necessary permissions (for example, the AmazonSageMakerFullAccess managed policy plus access to your S3 buckets).

5. Open the notebook and install required libraries (e.g., pandas, scikit-learn, SageMaker SDK).

You can follow AWS’s official Getting Started Guide for detailed instructions.

Training Your First Model

1. Upload a dataset to Amazon S3 (e.g., a CSV file with customer data).

2. Load the data into your notebook using pandas.

3. Preprocess the data (handle missing values, encode categories).

4. Use SageMaker’s built-in XGBoost algorithm to train a classification model.

5. Deploy the model as a real-time endpoint.

6. Test predictions using sample data.

AWS provides sample notebooks in SageMaker Studio to help you through each step. You can also explore SageMaker JumpStart for pre-built models and templates.
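Two of the steps above trip up many first-time users, so here is a small sketch of both. The built-in XGBoost algorithm expects CSV training data with the label in the first column and no header row, and predictions are requested by sending CSV rows to the endpoint. The column names and endpoint name are hypothetical, and the runtime client is injected (in practice `boto3.client("sagemaker-runtime")`) so the code runs without AWS access.

```python
import csv
import io


def to_xgboost_csv(rows, label_column):
    """Write dict rows as CSV with the label first and no header."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for row in rows:
        features = [v for k, v in row.items() if k != label_column]
        writer.writerow([row[label_column], *features])
    return out.getvalue()


def predict(runtime_client, endpoint_name, feature_rows):
    """Send feature rows as CSV and parse the scores that come back."""
    payload = "\n".join(",".join(str(v) for v in row) for row in feature_rows)
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=payload,
    )
    body = response["Body"].read().decode("utf-8")
    # Accept either comma- or newline-separated scores.
    return [float(x) for x in body.replace("\n", ",").split(",") if x.strip()]


customers = [
    {"age": 34, "plan": 1, "churned": 0},
    {"age": 51, "plan": 2, "churned": 1},
]
print(to_xgboost_csv(customers, label_column="churned"))
```

Remember to send only feature columns, in the same order used for training, when calling `predict`.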

Best Practices for Long-Term Success

As you grow with SageMaker, keep these best practices in mind:

- Version Your Data and Models: Use SageMaker Experiments and Model Registry to track changes.

- Automate Where Possible: Use SageMaker Pipelines for repeatable workflows.

- Monitor Model Performance: Enable SageMaker Model Monitor to detect drift.

- Optimize Costs: Use spot instances, auto-shutdown, and serverless inference.

By following these practices, you’ll build a scalable, maintainable ML practice on AWS SageMaker.

What is AWS SageMaker used for?

AWS SageMaker is used to build, train, and deploy machine learning models at scale. It’s commonly used for tasks like fraud detection, recommendation engines, predictive maintenance, and natural language processing. Its fully managed infrastructure makes it ideal for both beginners and enterprises.

Is AWS SageMaker free to use?

AWS SageMaker offers a free tier for new users, which at the time of writing includes 250 hours per month of ml.t2.medium or ml.t3.medium notebook instances for the first two months. Beyond that, features are pay-as-you-go, and costs depend on resource usage like compute time and storage. Free tier terms change, so check the current pricing page.

How does SageMaker compare to Google Vertex AI or Azure ML?

SageMaker offers deep integration with the AWS ecosystem, a broad set of built-in algorithms, and strong MLOps support via SageMaker Pipelines. Google Vertex AI is often praised for ease of use, and Azure ML integrates well with Microsoft tools. SageMaker tends to be preferred by teams already invested in AWS who need its scalability and enterprise features.

Can I use SageMaker for deep learning?

Yes, AWS SageMaker fully supports deep learning with frameworks like TensorFlow, PyTorch, and MXNet. It provides optimized Docker images, distributed training, and GPU-powered instances to handle large neural networks efficiently.

Do I need to know coding to use AWS SageMaker?

While SageMaker is code-centric (using Python and Jupyter notebooks), it also offers low-code options via SageMaker Studio and JumpStart. Beginners can use pre-built models and templates, while advanced users can customize every aspect of the ML pipeline.

In summary, AWS SageMaker is more than just a tool—it’s a complete ecosystem for machine learning. From its intuitive Studio interface to powerful automation and security features, it empowers teams to innovate faster and deploy models with confidence. Whether you’re building your first ML model or scaling a global AI system, SageMaker provides the foundation you need to succeed.
